Drones over Gaza: The lethal consequences of incorporating machine learning models into military UAVs, and other implications for occupied Palestine

by Sean McCafferty

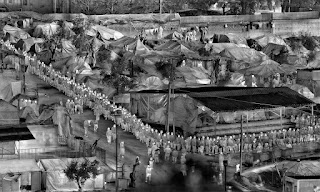

Operation 'Guardian of the Walls', May 2021 (Wikicommons)

The proliferation of Unmanned Aerial Vehicles (UAVs) - particularly loitering munitions - represents a significant development towards Lethal Autonomous Weapon Systems, demanding deeper reflection on the military deployment of machine learning. Most military UAVs are semi-autonomous systems and need human oversight for lethal action, yet the development of autonomous systems is already a reality. The UAVs developed by Israel Aerospace Industries and Elbit, for instance, can be deployed with varying levels of autonomy and are already being used by Israel in occupied Palestine. Technologies such as deep neural network image classifiers, which allow for automated target recognition when incorporated into UAVs, also increase the possibility of inaccurate violence and human fatalities.

This article looks at the incorporation of deep neural network image classifiers into the automated target recognition systems of military UAVs and their ability to perform accurately when presented with unfamiliar data during deployment. It then reflects on the use of automated UAVs in occupied Palestine, suggesting that the margin for error of this technology is unacceptable for military application. The focus on Israel is necessary as it is a tactically advanced user and developer of military UAVs. Indeed, Israel’s deployment of automated UAVs is likely to expand the violent asymmetry of the occupation of Palestine, produce more inaccurate violence, and force the development of new forms of Palestinian resistance.

Loitering Munitions

The loitering munition is a UAV that, when launched, ‘loiters’ over a predetermined area, using its automated target recognition capability to observe the target area. If it identifies a predetermined target, the loitering munition will then fly into the target, detonating its explosive charge. Israel Aerospace Industries developed the first loitering munition in the 1980s - the Harpy - designed to use automation to target radar and anti-aircraft defences. Even with the development of deep neural network-led automated target recognition, until recently almost all loitering munitions relied on a human ‘in the loop’ to ensure oversight and proportionality. The recent proliferation of loitering munitions, alongside the integration of deep neural networks to lead everything from flight patterns to image classification and swarming, therefore represents a growing offensive capability with the possibility of full autonomy. Loitering munitions appear to have already been deployed in Yemen, Nagorno-Karabakh, and notably Libya, where it is alleged that an STM Kargu drone carried out the first fully autonomous attack against human targets.

As industry development moves toward greater munition autonomy, the question of human supervision and control of lethal action looms large. Loitering munitions have likely been, and will continue to be, trained to identify a variety of targets including vehicles and humans. Their platforms have diverse designs, ranging from smaller quadcopters to more conventional fixed delta-wing models, with modern Israeli designs such as the Harop, Orbiter and SkyStriker earning the moniker of ‘Kamikaze Drones’. Loitering munitions also appear to have had a profound impact on the outcome of the war between Azerbaijan and Armenia in Nagorno-Karabakh, and current models have the capability to search for, identify, and engage targets on their own without any human in the loop.

Deep Neural Networks and Image Classification

UAVs often rely on object recognition through automated target recognition systems. These systems have existed since the 1970s, relying mostly on automation rather than on autonomy, and using pattern recognition through software that is programmed to recognise targets based on manually predefined target signatures. The incorporation of machine learning, and particularly deep neural networks, has vastly expanded the accuracy and scale of pattern recognition and increased autonomy.

Deep neural networks discover intricate structures in large datasets. During training, a process known as backpropagation adjusts the internal parameters of the machine learning model to improve its output, in this case the accurate classification of images. Deep neural networks contain multiple hidden layers that improve the classification process. After the network has been trained on training data, its performance is measured on a new set of data drawn from the same source, called a test set. This serves to test the generalisation ability of the machine; that is, its ability to produce accurate answers on new inputs not seen during training. Deep neural networks have brought about breakthroughs in processing images, video, speech, and audio. Such supervised learning relies on human input through the labelling of data to inform the process of backpropagation. For large datasets, significant manpower is required to manually label training data.
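The train-then-test procedure described above can be sketched in a few lines. This is only a toy illustration under stated assumptions: it uses a single-layer logistic classifier rather than a deep network, and the synthetic two-class data, the function names, and the size of the shift are all hypothetical. It trains on one sample, measures accuracy on a held-out test set drawn from the same source, and then measures accuracy again after the test inputs have been shifted to mimic unfamiliar deployment conditions.

```python
import math
import random


def make_data(n, rng):
    """Synthetic two-class data: Gaussian blobs centred at (-1, -1) and (+1, +1)."""
    data = []
    for _ in range(n):
        label = rng.randint(0, 1)
        centre = 1.0 if label == 1 else -1.0
        point = (rng.gauss(centre, 0.7), rng.gauss(centre, 0.7))
        data.append((point, label))
    return data


def predict_prob(w, b, x):
    """Probability that x belongs to class 1 under a logistic model."""
    z = w[0] * x[0] + w[1] * x[1] + b
    return 1.0 / (1.0 + math.exp(-z))


def train(data, epochs=200, lr=0.1):
    """Full-batch gradient descent: parameters are nudged to reduce the error."""
    w, b = [0.0, 0.0], 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for x, y in data:
            err = predict_prob(w, b, x) - y
            grad_w[0] += err * x[0]
            grad_w[1] += err * x[1]
            grad_b += err
        w[0] -= lr * grad_w[0] / n
        w[1] -= lr * grad_w[1] / n
        b -= lr * grad_b / n
    return w, b


def accuracy(w, b, data):
    """Fraction of examples whose predicted class matches the label."""
    correct = sum((predict_prob(w, b, x) >= 0.5) == (y == 1) for x, y in data)
    return correct / len(data)


rng = random.Random(0)
train_set = make_data(200, rng)
test_set = make_data(200, rng)  # same source, unseen during training
# Hypothetical deployment shift: every input is offset from what training saw.
shifted_set = [((x[0] + 1.5, x[1] + 1.5), y) for x, y in test_set]

w, b = train(train_set)
train_acc = accuracy(w, b, train_set)
test_acc = accuracy(w, b, test_set)
shifted_acc = accuracy(w, b, shifted_set)

print(f"train accuracy:        {train_acc:.2f}")
print(f"test accuracy:         {test_acc:.2f}")
print(f"shifted-data accuracy: {shifted_acc:.2f}")
```

A high test score here only says the model generalises to more data from the same source; the drop on the shifted inputs is the kind of gap that a test set, by construction, cannot reveal.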

Unsupervised learning

There has been a shift to unsupervised learning for these deep neural networks as the desire to process more data has outstripped the capacities of human input. During unsupervised learning the deep neural network is trained on unlabelled data, further improving the scale of data that can be processed. In both supervised and unsupervised deep neural networks, the layers of representation that the machine identifies do not correspond to patterns that humans rely on to identify objects. As such, it becomes impossible to understand the layers of representation that the deep neural network is using to classify images. While this creates high effectiveness in classifying large amounts of data, it also represents significant frailty. Many deep neural networks become black box systems and the process of image classification becomes opaque. The application of these classifiers to sensors on UAVs creates a fatal lack of transparency in the targeting process.

A lack of awareness of the layers of representation that the deep neural network uses to identify targets for an automated target recognition system, along with a reliance on unlabelled training data, makes it impossible to match the process with human norms of identifying a target. This discrepancy brings up a multitude of moral, legal, and operational questions about the use of this technology. Further, the accuracy of image identification is challenged when systems are deployed in dynamic or adversarial environments. For instance, their performance rapidly deteriorates as environments become more cluttered and weather conditions worsen.

Overall, deep neural networks are powerful tools for extracting correlations between all sorts of complex data, but there is no guarantee that these correlations are meaningful or that they correspond to actual causal relationships. Furthermore, deep neural networks often become black box systems, which prevents their inspection and control by human operators. For military applications, this is clearly a problem.

Failing Silently

It appears that relatively simple issues can confuse these image classifiers and cause their failure. Changing a correctly classified image in a way that is imperceptible to the human eye can cause the classifier to label the image as something wholly different. Further, it is possible to produce images that bear no resemblance to anything a human would recognise, yet which the DNN classifies with 99% confidence. Steps can be taken during training and testing to improve generalisation, making a deep neural network more robust when classifying new data and when facing potential adversarial attacks. However, margins for error in military deployment are far thinner than in many other applications of machine learning. Although the deep neural networks that classify images for UAVs can be made more robust, in their current state these systems retain the possibility of significant failures.
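Why can a change that is invisible to a person flip a classifier's answer? A minimal sketch, assuming a hypothetical linear classifier over a 28x28 greyscale image (not any deployed system), shows the mechanism: each pixel is nudged by at most 2% of its range in the direction that lowers the classifier's score, in the style of the fast gradient sign method, and the many tiny nudges add up across hundreds of pixels. The weights, image, and starting margin are random or chosen purely for illustration.

```python
import random

rng = random.Random(1)
N_PIXELS = 28 * 28
EPSILON = 0.02  # maximum change per pixel, on a 0..1 intensity scale

# Hypothetical linear classifier: class "A" if score > 0, otherwise class "B".
weights = [rng.gauss(0.0, 1.0) for _ in range(N_PIXELS)]
image = [rng.random() for _ in range(N_PIXELS)]
# Choose the bias so the original image is classified as "A" with a margin of 5.
bias = 5.0 - sum(w * x for w, x in zip(weights, image))


def score(pixels):
    return sum(w * x for w, x in zip(weights, pixels)) + bias


def label(pixels):
    return "A" if score(pixels) > 0 else "B"


def sign(value):
    return (value > 0) - (value < 0)


# For a linear model the gradient of the score with respect to each pixel is
# simply that pixel's weight, so stepping against sign(weight) lowers the score.
adversarial = [
    min(1.0, max(0.0, x - EPSILON * sign(w)))
    for w, x in zip(weights, image)
]

largest_change = max(abs(a - x) for a, x in zip(adversarial, image))

print("original label:   ", label(image))
print("adversarial label:", label(adversarial))
print(f"largest per-pixel change: {largest_change:.3f}")
```

No single pixel moves by more than `EPSILON`, yet the label changes, and nothing in the classifier's output signals that anything went wrong.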

Airstrikes organised and controlled by human operators without the influence of deep neural networks and image classifiers already produce inaccurate and violent results, particularly in urban areas where 90% of casualties from explosive violence are civilians. From 2011 to 2020, Action on Armed Violence (AOAV) recorded 5,700 deaths and injuries from explosive violence in Gaza. Among the casualties, 5,107 (90%) were civilians; of these civilian deaths 60% were caused by airstrikes. On top of this, the use of drone warfare across the ‘War on Terror’ set a dangerous precedent of targeted killing, the criteria of which are so vague as to permit the targeting of virtually any able-bodied person who happens to be in a place where terrorists are suspected to be. These significant failures in the deployment of strikes without the use of machine learning are unlikely to be revolutionised by the deployment of machine learning models. Instead, these models may fail silently, producing inaccurate violence that can be easily justified or brushed aside by military authorities, which already subject suspect populations to a politics of death in their deployment of conventional airstrikes.

Operation Guardian of the Walls

Between the 10th and 20th of May 2021, the Israeli Defence Force responded to Hamas’s and Palestinian Islamic Jihad’s rocket attacks with an offensive operation inside Gaza. Machine learning capabilities, particularly the use of swarmed drones to provide intelligence and identify targets, were central to the offensive. Throughout the offensive, machine learning-led drones were experimentally deployed to identify and strike targets. An Israeli Defence Force paratroopers brigade support company, previously armed with mortars, was converted into a drone swarm unit. The swarm unit carried out more than thirty operations, some against targets many kilometres from the Gaza-Israel border. Machine learning capabilities were applied across a variety of intelligence and weapons capabilities and appeared to be central to the new strategy of the Israeli Defence Force. An Israeli Defence Force spokesperson claimed that a multidisciplinary centre was established to coordinate the use of artificial intelligence and machine learning capabilities “to produce hundreds of targets relevant to developments in the fighting”. The Israeli Defence Force already relies on machine learning-led UAVs for intelligence gathering and strike operations and is actively seeking to expand its use across its forces. Thus, the introduction of loitering munitions appears to be a question of time.

Airwars data shows that during the operation between the 10th and the 20th of May 2021, between 151 and 197 Palestinian civilians were killed and up to 744 were injured by Israeli airstrikes. The extent of civilian harm calls into question the suggestion that the incorporation of machine learning-led systems will increase accuracy. Given the lack of robustness of automated target recognition systems led by deep neural networks, incorporating this technology into the existing framework of airstrikes and the myth of precision bombing means that it will only further civilian harm, as seen in Operation Guardian of the Walls.

Resistance

These weapons systems and the DNNs that lead their object recognition are likely to face adversaries that will seek to take advantage of weaknesses and disrupt the effectiveness of their image classification. In asymmetric contexts, strategy has always been about exploiting your opponent’s weaknesses. For instance, the work of Su, Vargas, and Sakurai highlights that DNNs trained to classify images can become confused and that their output can be altered by low-cost adversarial attacks based on differential evolution, which change a single pixel in an image to render the classifier ineffective. Their results show that 67.97% of the natural images in the Kaggle CIFAR-10 test dataset and 16.04% of the ImageNet test images can be perturbed to at least one target class by modifying just one pixel, with 74.03% and 22.91% confidence on average, respectively. This displays the relative brittleness of deep neural network image classifiers in the face of low-cost adversarial attacks. Further, other countermeasures may also prove effective, such as Wi-Fi jamming, lasers to confuse sensors, counter-UAV weapons, or the deployment of camouflage and decoys. When faced with adversaries, whether through manipulation of deep neural network training data or through physical countermeasures, the accuracy of such intelligence and weapons platforms is likely to suffer, with systems either failing or producing more inaccurate violent results.
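The idea of a one-pixel attack driven by differential evolution can be illustrated on a toy problem. The sketch below is not Su, Vargas, and Sakurai's implementation and reproduces none of their figures: it assumes a hypothetical linear classifier over an 8x8 greyscale image that has deliberately been placed close to its decision boundary, and a small evolutionary search (mutation without crossover, greedy selection) over candidate pairs of pixel position and new pixel value. All names, sizes, and parameters are illustrative.

```python
import random

SIDE = 8
N = SIDE * SIDE
MARGIN = 0.2  # the original image sits deliberately close to the boundary

rng = random.Random(7)
weights = [rng.gauss(0.0, 1.0) for _ in range(N)]
image = [rng.random() for _ in range(N)]
bias = MARGIN - sum(w * x for w, x in zip(weights, image))


def score(pixels):
    """Toy classifier: class "A" if score > 0, otherwise class "B"."""
    return sum(w * x for w, x in zip(weights, pixels)) + bias


def apply_candidate(candidate):
    """Return a copy of the image with exactly one pixel overwritten."""
    index = min(N - 1, max(0, int(candidate[0])))
    value = min(1.0, max(0.0, candidate[1]))
    perturbed = list(image)
    perturbed[index] = value
    return perturbed


def fitness(candidate):
    """Lower is better: the attacker wants the score pushed below zero."""
    return score(apply_candidate(candidate))


def one_pixel_attack(pop_size=60, generations=30, f=0.5):
    # Each candidate is [pixel position, new pixel value].
    population = [[rng.uniform(0, N), rng.random()] for _ in range(pop_size)]
    fits = [fitness(c) for c in population]
    for _ in range(generations):
        for i in range(pop_size):
            others = [p for j, p in enumerate(population) if j != i]
            a, b, c = rng.sample(others, 3)
            # Differential mutation: a base candidate plus a scaled difference.
            child = [a[k] + f * (b[k] - c[k]) for k in range(2)]
            child_fit = fitness(child)
            if child_fit < fits[i]:  # greedy selection keeps the better one
                population[i], fits[i] = child, child_fit
    best = min(range(pop_size), key=lambda i: fits[i])
    return population[best]


best_candidate = one_pixel_attack()
adversarial = apply_candidate(best_candidate)
pixels_changed = sum(1 for a, b in zip(image, adversarial) if a != b)

print(f"score before: {score(image):+.3f}")
print(f"score after:  {score(adversarial):+.3f}")
print(f"pixels changed: {pixels_changed}")
```

The search only ever queries the classifier's output, which is why attacks of this kind are described as low-cost: the attacker needs no knowledge of the model's internals.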

Repercussions in Palestine

Despite the significant brittleness of machine learning-led systems, they are nevertheless being deployed across a variety of environments and will widen the asymmetric nature of the occupation of Palestine. Israeli loitering munitions are likely to proliferate with Israel Aerospace Industries and Elbit as key manufacturers of these weapons. The Israeli state has often deployed experimental security measures against the Palestinian population to entrench its settler-colonial surveillance methods. As a result, machine learning-led intelligence and weapons platforms have expanded the capabilities of the Israeli Defence Force. Israel has already deployed UAVs in its offensive operations, as highlighted above. With such weapons systems, the responsibility for mistakes may be diffused. The lack of human oversight will allow military authorities to claim that decision-making is accurate and thereby prevent accountability for airstrikes when they are assisted or led by deep neural networks.

The material capabilities for violence held by Israel may force the evolution of techniques of resistance by Palestinians. When faced with state adversaries, non-state groups engage in a dexterous process of trial and error to evolve their methods of contention. Hamas has been developing a cyber warfare capability; it is likely that its military arm will be tasked with developing adversarial attacks and other counter measures. Groups like Hamas and the Palestinian Islamic Jihad are also attempting to deploy their own UAVs and loitering munitions; however, it is not known if they possess the capabilities to deploy them with machine learning targeting (though low-cost improvisation with this technology must not be discounted). These could be developed alongside low-tech physical countermeasures, either by established groups or by civilians unaffiliated with armed-resistance factions. In other words, the deployment of semi-autonomous and fully autonomous intelligence and weapons platforms led by deep neural networks will change the reality for occupied Palestine and bring further inaccurate violence. However, it is also likely that a wide array of methods of resistance will evolve to effectively challenge new forms of Israeli violence.

Conclusion

Ultimately, the incorporation of deep neural networks into automated target recognition systems, particularly on loitering munitions, represents a significant move toward Lethal Autonomous Weapons Systems. As identified above, the deep neural networks used for image classification have some inherent flaws. While extremely effective at processing large amounts of data, their lack of transparency and lack of robustness when processing new data leaves them with a margin of error that should be considered unacceptable for military applications. The incorporation of this technology into weapons platforms alongside the already flawed approach to airstrikes and targeted killing is likely to perpetuate inaccurate violence.

The Israeli Defence Force's increased use of machine learning-led technology in offensive operations will have consequences for the Palestinians. It appears that the use of this technology will deepen the violent asymmetry of the conflict and likely catalyse innovation in resistance methods. Machine learning has often been touted as a buzzword for progress, revolution, and sci-fi-like capabilities. What is clear even from a cursory investigation is that the military application of machine learning is more complex and nuanced than what its advocates suggest. As a result, it requires sharp reflection prior to deployment.

Suggested further reading

Atherton, Kelsey. “Loitering Munitions Preview the Autonomous Future of Warfare.” Brookings, August 4, 2021.

Boulanin, Vincent, and Maaike Verbruggen. Mapping the Development of Autonomy in Weapons Systems. Stockholm International Peace Research Institute, 2017.

Calhoun, Laurie. “The Real Problem with Lethal Autonomous Weapons Systems (LAWS).” Peace Review 33, no. 2 (April 3, 2021): 182–89.

Chamola, Vinay, Pavan Kotesh, Aayush Agarwal, Naren, Navneet Gupta, and Mohsen Guizani. “A Comprehensive Review of Unmanned Aerial Vehicle Attacks and Neutralization Techniques.” Ad Hoc Networks 111. February 2021.

Chamayou, Grégoire. A Theory of the Drone. Translated by Janet Lloyd. New York: The New Press, 2015.

Scharre, Paul. Army of None: Autonomous Weapons and the Future of War. New York: W.W. Norton & Company, 2019.

Voskuijl, Mark. “Performance Analysis and Design of Loitering Munitions: A Comprehensive Technical Survey of Recent Developments.” Defence Technology, August 2021.

About the author

Sean McCafferty is a Master's student studying Security, Intelligence, and Strategic Studies at the University of Glasgow, Dublin City University, and Charles University. Sean holds an undergraduate degree in history and politics from the University of Glasgow. His research interests cover a broad range of topics on security and the MENA region. He is currently focusing on political violence and emerging technologies with a specific interest in how methods of violence evolve in the context of asymmetric conflicts.
